Trust Series: Part 1

Trust – the non-negotiable in AI-driven experiences

Imagine opening your banking app only to discover your loan application has been denied, with nothing more than an “AI-based decision” by way of explanation. Or realising your health insurance premium has just shot up because an algorithm tagged you as high-risk. These aren’t far-fetched “what-ifs” anymore: as artificial intelligence moves from simple recommendations into high-stakes territory like finance and healthcare, a lack of transparency can erode trust and jeopardise customer loyalty.

Against this backdrop, trust has emerged as a non-negotiable currency. Renowned digital identity authority Dave Birch reminds us that “identity is the new money,” underscoring how personal data can make or break an organisation. Sarah Gold, a trust champion, emphasises embedding consent, clarity, and data ownership into AI services rather than treating them as afterthoughts. Meanwhile, internet pioneer Doc Searls pushes for a future where individuals, not corporations, dictate how data is shared, echoing the principles of VRM (Vendor Relationship Management).

Layer regulation such as the EU AI Act, GDPR, and the UK Data Protection Bill on top of rising public demand for ethical technology, and it’s clear: trust is no mere buzzword. This article explores why trust is becoming essential in AI-driven experiences, examines where it has been built or broken in real-world use cases, and outlines a path for businesses ready to embrace responsible innovation without sacrificing commercial success.

What do we mean by “Trust” in digital?

Trust in digital interactions goes beyond basic security. It encompasses transparency, reliability, privacy, and user-centric design. Think of trust as the confidence that a service will handle personal data responsibly, respect consent, and make decisions aligned with user interests.

Sarah Gold has long championed “user rights by design”, arguing that systems should embed meaningful consent controls from the ground up.

Cory Doctorow, an advocate for open access and digital freedom, coined the term “enshittification” to describe how platforms degrade the user experience over time to maximise profits. Being able to leave those platforms is a whole other article.

No longer is it enough to ask, “Does this platform do what I need?” Now we need to ask, “Can I rely on it to do what’s best for me—and on whose terms?”

Why now?

- Increased complexity

AI models aren’t just recommending movies; they’re now influencing finance, healthcare, and critical social services. This increases their impact and potential for harm. Everyone’s excited about what could be, but not enough people are thinking about the unintended consequences.

- Regulatory momentum

The EU AI Act and similar legislation worldwide push for transparent, fair AI. Meanwhile, GDPR requires that personal data is appropriately safeguarded. Together, these underscore the necessity of robust user protections, and the convergence of these separately drafted laws creates an increasingly complex regulatory environment.

- Evolving user expectations

People have grown more aware of data misuse and “black box” algorithms. Doc Searls highlights the importance of “pull-based” relationships, where the user is in control rather than a platform exploiting user data for profit. Fundamentally, a handful of companies colonised Web 2.0, and ideas like “if you’re not paying, you’re the product” became normalised.

How it works: building or eroding trust

Building trust

- Transparency: Clearly communicate how AI systems make decisions. If a service suggests a bank loan, for instance, explain the main criteria—like income thresholds or credit score requirements—without revealing proprietary algorithms.

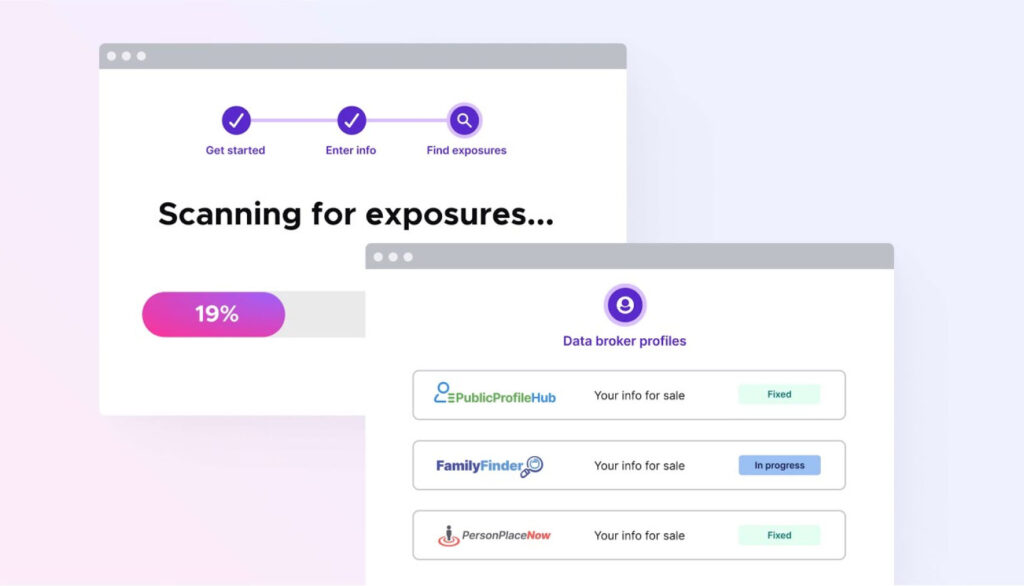

- Privacy and control: Respect the principle that users own their data. Provide intuitive dashboards or consent toggles so users can decide what is stored, shared, or sold. Gold’s work shows that even complex AI systems can be made user-friendly and consent-driven.

- Reliability & fairness: AI must minimise bias. Dr Joy Buolamwini, founder of the Algorithmic Justice League and MIT researcher, illustrates how facial recognition systems can fail marginalised communities if trained on biased datasets. Recognising and fixing such issues is critical to maintaining user confidence. If you haven’t seen it, Dr Joy’s SXSW address is well worth your time.

Example: Mozilla’s Privacy Tools

Mozilla, the organisation behind Firefox, consistently emphasises how it handles user data, offering straightforward privacy controls and explanations. This transparent approach helps Mozilla retain credibility even as the browser market becomes more competitive.

Eroding trust

- Data breaches and opaque policies: A single breach can irreparably harm user confidence, especially if people never knew such data was being collected.

- Manipulative interfaces: Doctorow often criticises “dark patterns” designed to trick users into sharing more data or consenting to undesirable terms.

- Unclear accountability: When users can’t tell who’s responsible for AI decisions—especially in high-stakes contexts like credit approvals or medical advice—they’re unlikely to trust the service for long.

Example: Cambridge Analytica

This scandal exposed the covert harvesting of Facebook user data for political microtargeting, eroding trust in social media platforms and igniting calls for stronger data protection laws. There are many other examples, such as Clearview AI, but Cambridge Analytica is probably the most high-profile.

Benefits and use cases

User loyalty and retention

Trust is a catalyst for long-term relationships. Customers who sense genuine respect for their privacy and autonomy are more inclined to stay, advocate, and provide meaningful feedback that can enhance AI-driven services. According to the 2022 Edelman Trust Barometer, 58% of consumers will advocate for a brand they trust, while 68% refuse to use one they do not.

Regulatory alignment

Complying with UK data protection rules or the EU AI Act is more than just a legal necessity; it’s an ethical imperative. Jamie Smith of Customer Futures, and a ‘Friend of Else’, suggests that user-centric, transparent AI solutions tend to remain well aligned with new regulations, saving companies from costly retrofits or reputation-damaging missteps.

Innovation as differentiation

With AI becoming ubiquitous, the differentiator often lies in how it’s delivered. Let’s say that again: with AI becoming ubiquitous, the differentiator often lies in how it’s delivered.

Searls’s VRM approach and Gold’s consent-by-design frameworks suggest that ethical AI design can open new markets and foster a loyal user base that willingly shares data because they trust how it’s used. Realising this requires a genuine change agent, willing to treat trust as an emerging source of commercial advantage.

Risks and challenges

Algorithmic bias and complexity

AI can inadvertently replicate human or dataset biases if not carefully audited. Buolamwini’s research highlights failures in facial recognition for women and people of colour, an oversight that can have dire consequences in policing, finance, and beyond. Addressing bias means continually checking data sources, retraining models, and communicating limitations.

Balancing innovation with regulation

Some fear that focusing on trust and compliance will hamper innovation. In truth, building responsibly from the outset can help organisations adapt to emerging regulations, avoid rushed patchwork solutions, and maintain a consistent user experience.

Managing user expectations

Even when platforms act responsibly, AI can still cause confusion. Doctorow advocates for “plain language disclaimers”, replacing dense legalese with user-friendly explanations so that people understand exactly what they’re opting into. One challenge will be getting everyday folk to care about this stuff again, but here at Else, we’re confident that will happen.

Comparison

A quick way to illustrate the impact of trust is to compare transactional and trusted interactions:

| | Transactional interaction | Trusted interaction |
|---|---|---|
| Focus | Complete a task or transaction as efficiently as possible | User-centric design, consent, and clarity |
| User control | Minimal, with limited transparency or data options | Robust privacy settings, meaningful consent flows, and transparent AI logic |
| Outcome | Efficiency, but potential user unease or suspicion | Enhanced loyalty, user satisfaction, and brand advocacy |

Wrapping up

Trust is the new baseline. As AI shapes everything from shopping recommendations to life-altering decisions about credit, employment, and healthcare, users and lawmakers alike are calling for greater transparency, privacy, and ethics. It’s no longer enough to roll out AI and hope it works in everyone’s favour; these systems must demonstrably align with user well-being.

For businesses, adopting a trust-first philosophy can appear daunting. Yet it reaps major rewards: loyal users, easier compliance, and a genuine competitive edge in an AI-driven economy. Now is the time to audit your digital touchpoints, scrutinise how data is captured and leveraged, and recalibrate your design approach around trust—a principle championed by thinkers like Gold, Birch, Searls, Buolamwini, Smith, and Doctorow. Their collective wisdom underscores a shared message: a digital future that honours user rights, autonomy, and ethical innovation isn’t just possible—it’s imperative.

TL;DR

- AI’s growing influence: With AI making crucial decisions, trust is vital for confident user adoption.

- Key thinkers: Experts such as Sarah Gold, Dave Birch, Doc Searls, Dr Joy Buolamwini, Jamie Smith, and Cory Doctorow all emphasise the user-centric, ethical handling of data and AI systems. And of course, here at Else, we do too.

- Core elements of trust: Transparency, privacy, user control, and fairness must be built into AI from day one. We cover our Trusted Interactions framework in a later article.

- Business benefits: Trust leads to higher loyalty, smoother regulatory compliance, and a distinctive market advantage.

- Your call to action: Examine existing AI tools and data policies, ensuring they fit a trust-first framework rather than “bolt-on” compliance. Or, if you haven’t established any AI tooling yet, bake this in from the get-go. And it goes without saying, but do give us a shout if you need help with this.

Some questions

Q: Does ‘trust’ only mean data privacy?

A: No. While privacy is critical, trust also involves explaining how AI arrives at decisions, minimising bias, and giving users genuine control over how their data is used.

Q: Might a trust-first approach slow down product development?

A: Initially, yes. But in the long run, it prevents costly rework and reputation damage, allowing for sustainable innovation.

Q: We only use basic AI. Why bother with these principles?

A: Even small AI features impact user perceptions. Embedding trust from the start prepares you for future expansions in AI capability.

Q: How do we measure ‘trust’?

A: Track user satisfaction, churn, and complaints. Some organisations adopt formal metrics—like a Trusted Interaction Score—to quantify how well they uphold transparency, user control, and privacy.

Final note

Trust isn’t a nice-to-have; it’s a strategic asset and a moral responsibility. Embrace it in your AI design, and you’ll find that innovation and user well-being can thrive together, creating a resilient digital ecosystem for all.

At Else, we combine deep industry insights with practical design expertise across loyalty (O2, MGM, Shell), identity (GEN’s Midy), regulated sectors (UBS, T. Rowe Price, Boehringer Ingelheim, Bupa), and AI innovation (Genie, Good.Engine, plus our own R&D).

Drawing on this broad experience, we’re uniquely positioned to help organisations embed trust, transparency, and user-centricity into digital product and service delivery—ensuring that the future of AI-driven experiences remains both ethical and commercially compelling.

Interested in learning more about Trust and AI?

This is the first of a six-part series exploring emergent concepts around trust and AI—vital considerations for anyone responsible for digital product/service delivery and innovation. Across these articles, we delve into frameworks, real-world examples, and thought leadership insights to help organisations design user-centric experiences that uphold privacy, transparency, and ethical autonomy. Whether you’re a designer, product manager, or executive, these perspectives will prepare you to navigate the evolving intersection of technology, regulation, and user expectations with confidence and clarity.
