Trust Series: Part 5

Operationalising trust: Education, feedback and improvement

Baking trust into product/service experiences isn’t a one-time shot, of course; it’s a live environment. So we’re going to need to bring a bit of organisational consensus to managing trust on an ongoing basis.

So, you’ve embraced the pillars of trust (privacy, control, transparency) and even introduced a Trusted Interaction Score. The next challenge is embedding these ideals into your daily operations. How do you ensure designers, developers, and senior leaders live and breathe user-centric principles, rather than seeing them as a tick-box exercise?

This article explores how to operationalise trust—through education, robust feedback mechanisms, and a culture of ongoing improvement. We’ll also outline some practical steps to integrate trust into every level of your organisation.

What do we mean by operationalising trust?

Operationalising means making trust frameworks actionable. Instead of one-time workshops or last-minute “compliance checks,” trust becomes part of every design sprint, user story, and technical roadmap. As Doctorow suggests: if user rights and data sovereignty aren’t systematically reinforced, they tend to get forgotten in the rush to meet deadlines and KPIs.

Why It Matters

- Avoiding ‘checkbox’ culture

Merely ticking privacy or consent boxes can lead to a false sense of security—and backlash if issues arise.

- Regulatory readiness

Laws like the EU AI Act can evolve. By building flexible, trust-centric processes now, you’re better prepared for future mandates.

- Long-term loyalty

Trust begets loyalty. Dave Birch reminds us that when users feel safe sharing credentials and personal data, more meaningful interactions (and commerce) flourish. This is going to be HUGE going forward, so get on the Empowerment Tech train… all aboard!

Education & training

- Regular workshops: Provide sessions on ethical AI, data privacy, and user consent for product managers, designers, and developers.

- Onboarding: New hires should learn about your trust principles as early as possible—so it’s part of their mindset, not an afterthought.

- Executive buy-in: Leadership teams must champion trust as integral to company success. Dr Joy Buolamwini highlights how bias can persist if there’s insufficient top-down commitment to ethical standards.

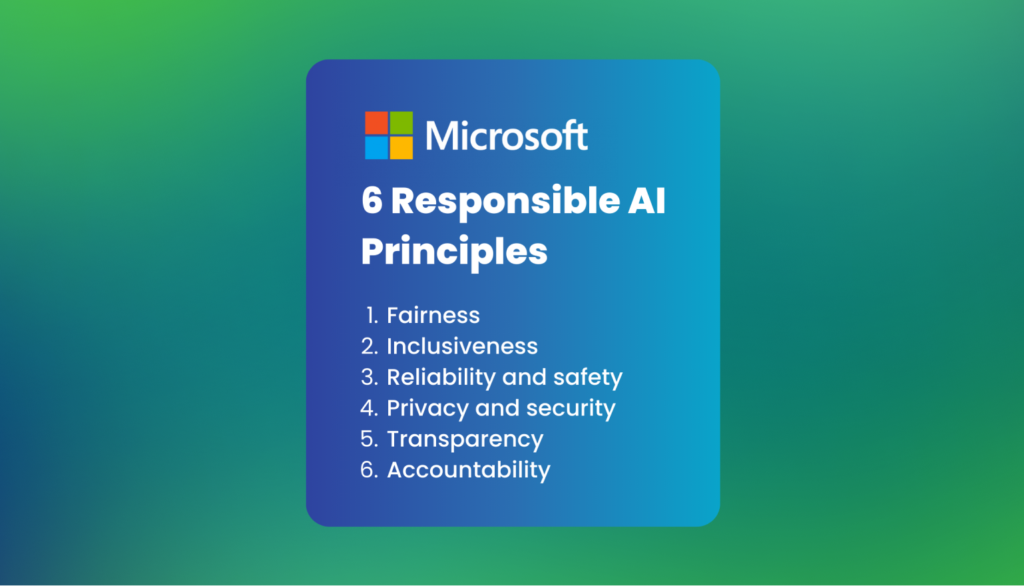

Real-Life Example – Microsoft’s AI Ethics Training

Microsoft has publicised its Responsible AI principles and trains its teams on fairness, transparency, and privacy. By formalising these values, they move beyond lip service.

Feedback mechanisms

Searls argues that user involvement shouldn’t stop at sign-up. Actively solicit feedback on how users feel about your AI’s decisions, data usage, and consent flows:

- User panels: Invite power users to beta-test new features, focusing on privacy controls and consent clarity. I mean, it’s an old-school idea, but hey.

- In-app feedback: Offer a one-click system for users to flag uncomfortable interactions or AI decisions. Believe it or not, we’ve worked with many organisations that really struggle to do this well, yet it isn’t hard to do properly if you actually care.

- Surveys & forums: Periodically gauge user trust levels, possibly integrating these insights into your TIS (see Article 4, “Measuring trust: Introducing Else’s Trusted Interaction Score (TIS)”).
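As a rough illustration of the in-app feedback idea, here is a minimal sketch of what a one-click flag might record and how flags could be tallied. Everything in it is an assumption for illustration: the `TrustFlag` schema, the `flag_interaction` and `flags_by_feature` helpers, and the feature and reason names are hypothetical, and a real product would persist flags somewhere more durable than an in-memory list.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class TrustFlag:
    """One user report about an uncomfortable interaction (hypothetical schema)."""
    user_id: str
    feature: str  # illustrative, e.g. "recommendations"
    reason: str   # illustrative, e.g. "unclear_consent"
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


def flag_interaction(store: list, user_id: str, feature: str, reason: str) -> TrustFlag:
    """Record a flag in a single call, which is what a one-click button would trigger."""
    flag = TrustFlag(user_id=user_id, feature=feature, reason=reason)
    store.append(flag)
    return flag


def flags_by_feature(store: list) -> Counter:
    """Count flags per feature so a team can see where discomfort clusters."""
    return Counter(flag.feature for flag in store)
```

The point of the design is that the user does one thing (click), while the team gets a structured record it can count and review, for example in a trust retrospective.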

Real-Life Example – Slack’s Feature Iteration

Slack frequently tests new features with selected user groups, refining based on real-world feedback. While not purely about AI, the principle of integrated user input to shape final features remains key to trust-building.

Continuous improvement cycles

Borrowing from Gold: treat trust as an iterative design challenge, not a one-off fix.

- Trust retrospectives: After each sprint or release, evaluate how well new features align with trust pillars (privacy, control, transparency). We love this and can think of other themed retros that should be a common mainstay for teams.

- Cross-functional teams: Involve legal, design, and engineering from the outset. If privacy is only the legal team’s domain, it won’t get due consideration in UX. Again, we’ve seen this cross-functional collaboration bring huge gains, especially when working in regulated environments. It never ceases to amaze us how many design, dev, and legal teams have never met IRL.

- Regular TIS updates: If you’re using a Trusted Interaction Score, recalculate it after major changes. Publicly discuss improvements or declines to instil accountability.
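To make the "recalculate and discuss" step concrete, here is a small sketch that compares a TIS value before and after a release. It does not compute the TIS itself (that is covered in Article 4); it simply assumes the score is a single number where higher is better. The `TisSnapshot` type, the `tis_change` helper, and the `tolerance` parameter are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TisSnapshot:
    """A TIS value recorded for one release (hypothetical structure)."""
    release: str
    score: float  # assumed numeric, higher is better; scale and formula not defined here


def tis_change(previous: TisSnapshot, current: TisSnapshot, tolerance: float = 0.0) -> str:
    """Classify the movement between two snapshots.

    Differences within +/- tolerance count as "unchanged" so that small
    fluctuations are not reported as a real shift.
    """
    delta = current.score - previous.score
    if delta > tolerance:
        return "improved"
    if delta < -tolerance:
        return "declined"
    return "unchanged"
```

A team could run something like this after each major change and bring any "declined" result to the next trust retrospective.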

Benefits and use cases

Organisational alignment

When trust is operationalised, everyone speaks the same language—engineers, marketing, product leads. This alignment reduces internal friction and last-minute compliance emergencies.

Early detection of red flags

User complaints or bug reports on AI transparency aren’t overlooked because there’s an established channel for addressing them. Jamie Smith emphasises that agile responses to user concerns can prevent reputational issues later.

A culture of innovation

Contrary to fears that trust stifles creativity, embedding it from day one can foster more innovative solutions. Dave Birch contends that strong digital identity frameworks free up companies to build smarter features without worrying about data misuse allegations down the line. Plus, as interactions increase in complexity with the advent of things like Dynamic Consent, it’s more than the right thing to do if you care about your customers.

Risks and challenges

Change management

Teams may resist new processes or see “trust tasks” as hurdles. Overcome this by showcasing success stories—like how focusing on user privacy decreased churn or boosted brand perception.

Resource allocation

Embedding trust in day-to-day ops requires time, budget, and skilled personnel. Some might argue it’s too costly, but Doctorow warns that ignoring user agency can lead to more severe backlash and lost user loyalty.

Scaling issues

What works for a 20-person startup may not directly translate to a 10,000-person enterprise. Dr Joy Buolamwini’s bias audit frameworks show the importance of tailoring trust initiatives to data complexity and team size. This requires further thought.

Comparison

| | Trust as One-Off Initiative | Operationalised Trust |
| --- | --- | --- |
| Focus | Quick compliance fixes, sporadic user feedback | End-to-end integration: training, feedback loops, continuous improvement |
| Team Engagement | Limited to privacy/legal teams | Cross-functional: everyone owns trust goals |
| Outcome | Check-box solutions, potential user distrust over time | Sustainable culture of ethical design and user loyalty |

Wrapping up

Operationalising trust requires concerted effort—education, feedback loops, and continuous improvement are the keystones. We can see that bridging the gap between aspirational ethics and real-world practice is both challenging and eminently doable.

When trust initiatives extend beyond policy statements to shape how teams collaborate, plan features, and respond to user issues, you nurture a long-term bond with your audience. It’s a bond founded not on forced consent or hidden data harvesting, but on genuine respect and ongoing dialogue.

TL;DR

- Operationalising: Move from theoretical trust principles to daily workflows and team culture.

- Education & training: Regular workshops and onboarding ensure everyone knows the “why” and “how” of user-centric design.

- Feedback loops: Gather user input continuously, adjusting as you go rather than post-crisis.

- Continuous improvement: Run trust retrospectives and keep your TIS updated to identify strengths and weaknesses.

Your call to action: Start building trust into every sprint, meeting, and milestone—turn it into a living practice, not an afterthought. Maybe reach out if you are interested in a Trust Sprint with the folks from Else.

Some questions

Q: How do we keep senior leadership committed?

A: Regularly share trust metrics (like TIS) and user feedback. Demonstrate that trust is a key differentiator and a compliance safeguard.

Q: Isn’t this approach too ‘heavy’ for a fast-moving startup?

A: Early adoption of trust principles can prevent future rework and PR disasters. Smaller teams often pivot more easily once they bake trust into their workflow.

Q: Should we dedicate a department to “trust”?

A: Some larger companies do, but cross-functional collaboration is essential. A siloed “trust team” can’t fix structural issues if not integrated into product roadmaps.

Q: Does operationalising trust stifle monetisation?

A: Not necessarily. By building a reputation for ethics, you can attract more loyal users and strategic partnerships, potentially offsetting any short-term data-collection restrictions.

Final note

Trust isn’t a static box-ticking exercise—it’s a living, evolving relationship with your users. Operationalise it through education, open feedback loops, and iterative improvements, and you’ll forge a brand identity that not only complies with regulations but also stands out in a digital ecosystem hungry for genuine, user-centric innovation.

At ELSE, we combine deep industry insights with practical design expertise across loyalty (O2, MGM, Shell), identity (GEN’s Midy), regulated sectors (UBS, T. Rowe Price, Boehringer-Ingelheim, Bupa), and AI innovation (Genie, Good.Engine, plus our own R&D).

Drawing on this broad experience, we’re uniquely positioned to help organisations embed trust, transparency, and user-centricity into digital product and service delivery—ensuring that the future of AI-driven experiences remains both ethical and commercially compelling.

Interested in learning more about designing for Trust?

This is the fifth article in a six-part series exploring emergent concepts around trust and AI—vital considerations for anyone responsible for digital product/service delivery and innovation. Across these articles, we delve into frameworks, real-world examples, and thought leadership insights to help organisations design user-centric experiences that uphold privacy, transparency, and ethical autonomy. Whether you’re a designer, product manager, or executive, these perspectives will prepare you to navigate the evolving intersection of technology, regulation, and user expectations with confidence and clarity.
