Trust Series: Part 4

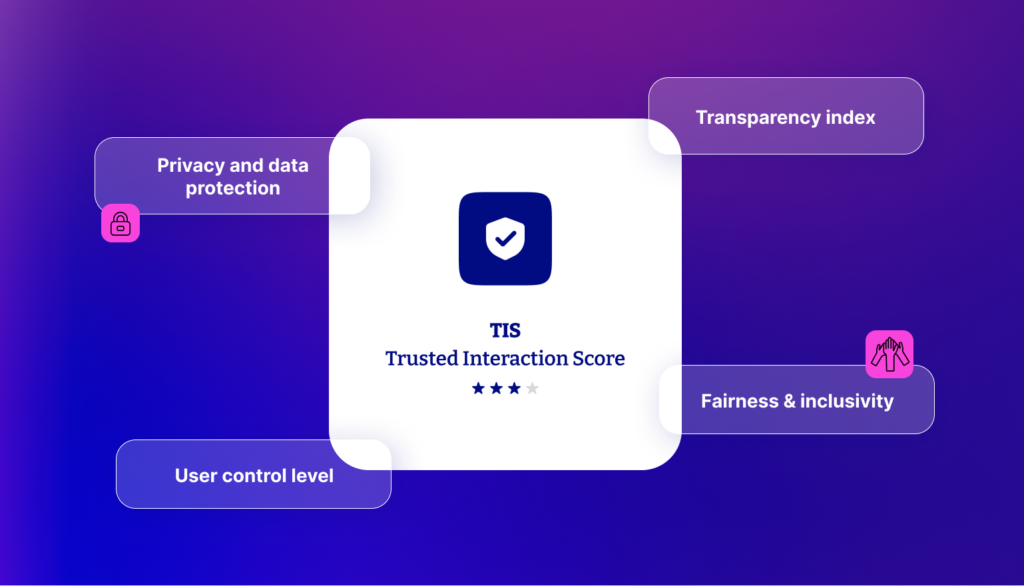

Measuring trust: Else’s Trusted Interaction Score

How does it go? What gets measured gets managed? As proponents of Design Effectiveness, we’re all for a measurement framework that communicates the clear commercial value generated by considerate design decisions. So let’s apply this thinking to an important emergent area: trust.

“How do we know our users actually trust us?” This question resonates with any organisation that has worked hard to build transparency, privacy, and ethical AI practices. Relying on subjective user feedback or sporadic surveys can be imprecise. Enter Else’s Trusted Interaction Score (TIS)—a structured, data-driven way to quantify how well a product or service adheres to trust principles.

Informed by the philosophies of people we consider thought leaders in the trust, identity and privacy space, TIS aims to turn an often fuzzy concept—trust—into actionable metrics. This article outlines why TIS matters, how to build it, and which components every organisation should measure if they’re serious about maintaining user confidence in an AI-driven world.

Definition & context

What is TIS?

The Else Trusted Interaction Score is a composite metric that evaluates products or services across multiple trust dimensions. Think of it as a “credit score” for user-centric design. Jamie Smith of Customer Futures emphasises the importance of quantitative benchmarks in identifying blind spots and highlighting successes in building trust. Similarly, Dr Joy Buolamwini’s work with the Algorithmic Justice League demonstrates how algorithmic auditing can measure and address bias in AI systems.

Components of the Trusted Interaction Score include:

- Privacy and data protection: Evaluates how well data is safeguarded and minimised.

- Transparency index: Measures clarity around AI usage, data handling, and user rights.

- User control level: Assesses whether users can easily adjust permissions and revoke data access.

- Fairness & inclusivity: Checks for steps taken to reduce bias and ensure accessibility, aligning with Dr Joy Buolamwini’s insights on underrepresented users.

Why bother quantifying trust?

Clear focus: A robust score helps leaders prioritise improvements (e.g., is the biggest gap in transparency or data security?).

Continuous improvement: TIS enables companies to track trends over time, keeping trust across the whole experience under regular review.

Competitive differentiation: Publishing a strong TIS can set a brand apart, showing commitment to ethically minded AI—a concept Cory Doctorow frames as vital in a market often rife with “dark patterns.”

How it works: Components of the Trusted Interaction Score

A. Data protection score

Encryption & storage protocols: Are you using state-of-the-art methods for data at rest (being stored) and in transit (being shared)?

Breach response plans: Do you have transparent, user-friendly procedures if a data breach occurs? Remember, the ICO is watching.

Minimal collection principle: As Dave Birch frequently reminds us, collecting more personal data than necessary invites both technical and ethical risks. Toxic data leak, anyone?

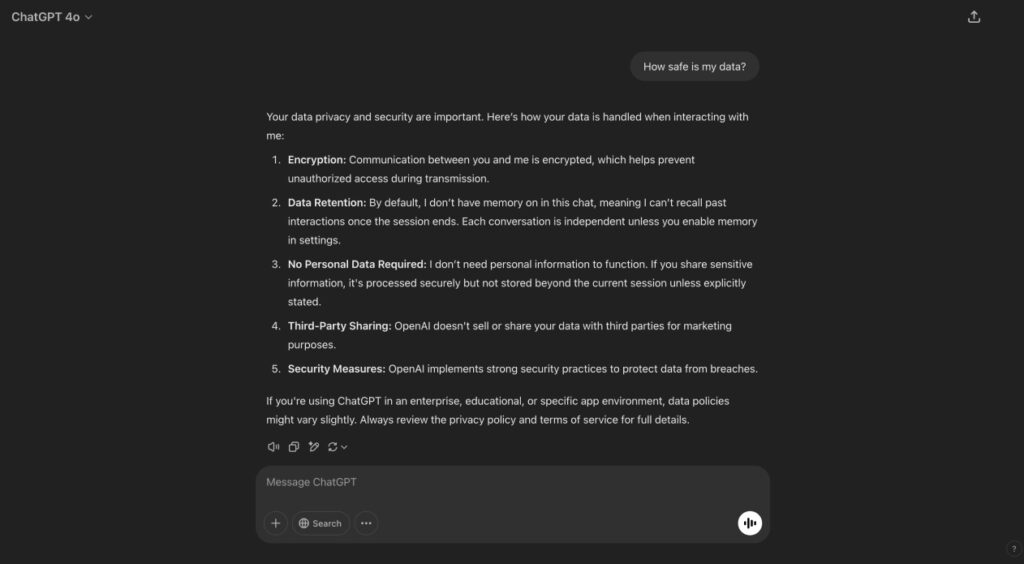

B. Transparency index

Explainable AI: Provide users with clear, jargon-free breakdowns of how decisions are made.

Readable policies: Replace “legalese” with accessible language, à la Cory Doctorow’s push for open disclosure.

Regular updates: If your AI or data policies change, do you proactively inform users?

C. User control level

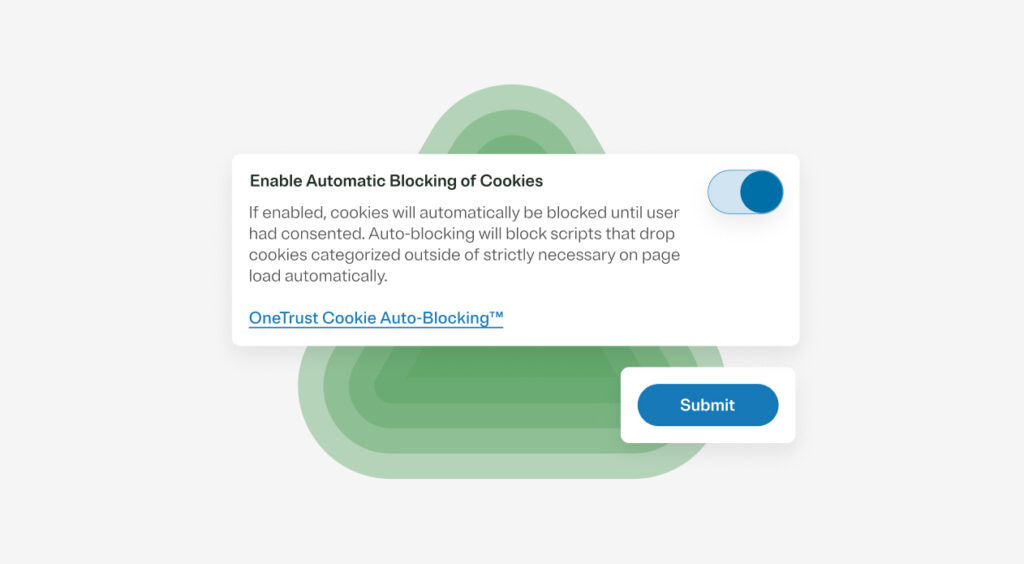

Dynamic consent capabilities: As outlined in Article 3 of this series (Dynamic consent and personal AI agents: Rethinking permissions in a world of autonomous decisions), users should be able to adjust permissions over time.

Self-serve dashboards: Offer straightforward tools to edit, export, or delete user data. Phwoar, just think of the data visualisations…

Tiered autonomy for AI: Borrowing from Gold’s design principles, let users decide how active or passive an AI agent can be on their behalf.

D. Fairness & inclusivity metric

Bias testing: Regularly audit AI models for demographic or data-related biases, mirroring Dr Joy Buolamwini’s research approach.

Accessible design: Ensure your platform supports assistive technologies and inclusive language.

Remediation steps: If biases or accessibility gaps are detected, is there a documented process to fix them?
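To make the four components concrete, here is a minimal sketch of how sub-scores and a composite might be calculated. It assumes each checklist item above is scored from 0.0 to 1.0 and that the pillars are weighted equally. The item names, values and weights are hypothetical and are not Else’s actual rubric.

```python
from statistics import mean

# Hypothetical checklist results per pillar: each item is scored
# from 0.0 (absent) to 1.0 (fully met). Names and values are
# illustrative assumptions, not an official TIS rubric.
PILLAR_CHECKS = {
    "data_protection": {"encryption": 1.0, "breach_response": 0.5, "minimal_collection": 0.5},
    "transparency": {"explainable_ai": 0.5, "readable_policies": 1.0, "regular_updates": 0.5},
    "user_control": {"dynamic_consent": 0.5, "self_serve_dashboard": 0.0, "tiered_autonomy": 0.5},
    "fairness_inclusivity": {"bias_testing": 1.0, "accessible_design": 0.5, "remediation": 0.0},
}

# Assumed equal weighting; an organisation could weight pillars differently.
WEIGHTS = {pillar: 0.25 for pillar in PILLAR_CHECKS}


def pillar_scores(checks):
    """Return a 0-100 sub-score per pillar: the mean of its checklist items."""
    return {pillar: round(mean(items.values()) * 100, 1) for pillar, items in checks.items()}


def composite_tis(sub_scores, weights):
    """Return the weighted average of the sub-scores (weights are normalised)."""
    total_weight = sum(weights.values())
    return round(sum(sub_scores[p] * weights[p] for p in sub_scores) / total_weight, 1)


sub_scores = pillar_scores(PILLAR_CHECKS)
tis = composite_tis(sub_scores, WEIGHTS)
print(sub_scores)
print(f"TIS: {tis}")
```

Keeping the sub-scores alongside the composite means the headline number can always be traced back to the pillar, and the checklist item, that moved it.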

Benefits and use cases

Continuous feedback loop

With a TIS in place, teams can track improvements quarter by quarter. If the User Control Level dips due to a new feature that wasn’t properly explained, for example, an immediate action plan can be implemented. Jamie Smith encourages seeing trust as iterative, just like any design or product cycle.
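As a rough illustration of that feedback loop, the sketch below compares hypothetical quarterly sub-scores and flags any pillar that has dropped sharply. The scores and the five-point threshold are assumptions made for the example.

```python
# Hypothetical quarterly sub-scores (0-100); the figures are invented for illustration.
HISTORY = [
    ("Q1", {"data_protection": 70, "transparency": 65, "user_control": 60, "fairness_inclusivity": 55}),
    ("Q2", {"data_protection": 72, "transparency": 66, "user_control": 48, "fairness_inclusivity": 57}),
]

DIP_THRESHOLD = 5  # assumed: a fall of more than 5 points triggers a review


def find_dips(history, threshold):
    """Compare each quarter with the previous one and list pillars that fell by more than threshold."""
    dips = []
    for (_, prev), (label, curr) in zip(history, history[1:]):
        for pillar, score in curr.items():
            drop = prev[pillar] - score
            if drop > threshold:
                dips.append((label, pillar, drop))
    return dips


dips = find_dips(HISTORY, DIP_THRESHOLD)
for quarter, pillar, drop in dips:
    print(f"{quarter}: {pillar} fell {drop} points; raise an action plan")
```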

Regulatory alignment

Using TIS elements—data protection, transparency, user control, and fairness—naturally aligns with the EU AI Act and GDPR. Rather than scrambling to achieve compliance, TIS helps keep you consistently on track.

Public accountability

Some organisations may choose to publish their TIS, showcasing transparency and commitment to trustworthy AI. Doc Searls advocates for “radical user sovereignty,” which can be reinforced if companies declare (and then meet) measurable targets for user-centric design.

Loyalty, brand perception, and commercial impact

Trust isn’t just a compliance issue—it’s a competitive advantage. Businesses that embed transparency, user control, and ethical AI practices into their digital experiences don’t just meet regulations; they build stronger relationships with customers.

A high Trusted Interaction Score (TIS) signals to users that an organisation prioritises their autonomy and privacy, fostering brand loyalty and long-term engagement. When customers feel secure and in control, they are more likely to return, advocate for the brand, and deepen their interactions, which gives the organisation a commercial edge in an increasingly privacy-conscious market.

Risks and challenges

Over-simplification

A single numeric score can hide complexities; e.g., strong data protection might overshadow weak user control. Therefore, break down the final TIS into sub-scores to maintain nuance.
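The sketch below shows the effect with made-up numbers: the headline average looks healthy while one pillar sits well below an assumed minimum. The scores and the floor of 50 are hypothetical.

```python
# Hypothetical sub-scores on a 0-100 scale; values are illustrative only.
sub_scores = {"data_protection": 92, "transparency": 80, "user_control": 38, "fairness_inclusivity": 74}

FLOOR = 50  # assumed minimum acceptable score for any single pillar


def summarise(sub_scores, floor):
    """Return the unweighted headline score and the pillars sitting below the floor."""
    headline = sum(sub_scores.values()) / len(sub_scores)
    weak = sorted(p for p, s in sub_scores.items() if s < floor)
    return headline, weak


headline, weak = summarise(sub_scores, FLOOR)
print(f"Headline TIS: {headline:.1f}")
print(f"Pillars below floor: {weak}")
```

Reporting the weak pillars next to the headline figure keeps a strong data protection score from masking a user control problem.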

Measuring the unmeasurable

Trust also involves intangible factors: brand perception, community sentiment, and cultural expectations. A TIS can’t capture every nuance, so complement it with regular qualitative methods, such as user interviews and focus groups, to understand how target audiences feel about the customer experience (CX).

Lack of universal standards

No universal “seal of approval” exists yet. Cory Doctorow warns that self-reported metrics can be gamed. Cross-industry or third-party audits (mirroring Dr Joy Buolamwini’s AI bias audits) may help standardise and validate scoring.

Comparison

| | Ad Hoc ‘Trust’ Efforts | Structured TIS Approach |
| --- | --- | --- |
| Focus | Reactive, patchy fixes when issues arise | Proactive, consistent improvements guided by tangible metrics |
| Team Engagement | Varies by incident or complaint | Clear ownership: each pillar (data, transparency, control, fairness) has KPIs |
| Outcome | May address symptoms, not root causes | Systemic improvements; fosters a culture of trust-building |

Wrapping up

The Else Trusted Interaction Score (TIS) transforms trust from an ambiguous buzzword into a measurable, trackable framework. By evaluating data protection, transparency, user control, and fairness & inclusivity, businesses can pinpoint where they excel and where they need work. It’s an approach that echoes the steer and advice from the likes of Birch et al: building user trust isn’t a one-time task but an ongoing, evolving commitment.

Naturally, Else can help you to develop your view of trust across your digital estate, but whether you publish your TIS externally or use it internally as a barometer, the act of measuring trust pushes the entire organisation toward more ethical, user-centric product decisions. And in a world increasingly shaped by AI and data, that’s precisely the kind of focus that sets winners apart.

TL;DR

- What Is TIS?: A composite measure evaluating privacy, transparency, user control, and fairness in digital products.

- Why It Matters: Drives continuous improvement, aids regulatory compliance, and builds public accountability.

- Key Components: Data Protection Score, Transparency Index, User Control Level, and a Fairness & Inclusivity Metric.

- Benefits: Improves internal alignment, fosters loyalty, and differentiates brands that openly commit to trust.

- Your call to action: Start designing (or adopting) Else’s TIS framework to quantify and enhance user trust in your AI ecosystem. Better still, invite us in to help you work all this out.

Some questions

Q: Is one metric enough to capture the complexity of trust?

A: Often you’ll break it into sub-scores. A single TIS can serve as an overall indicator, but detail helps isolate and fix specific issues.

Q: Should we make TIS public?

A: That’s a strategic choice. Public disclosure can foster credibility but also invites scrutiny. If you do share it, be transparent about how it’s calculated.

Q: Aren’t biases tough to measure?

A: Yes. Dr Joy Buolamwini’s work shows it’s still possible—through dataset audits and model performance reviews—to spot and mitigate bias over time.
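One simple form of model performance review is to compare accuracy across demographic groups. The sketch below is a generic illustration with invented records and group names. It does not reconstruct any particular audit methodology.

```python
from collections import defaultdict

# Hypothetical evaluation records: (demographic group, model prediction, true label).
# Group names and outcomes are invented for illustration.
RECORDS = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]


def accuracy_by_group(records):
    """Return the share of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


accuracy = accuracy_by_group(RECORDS)
gap = max(accuracy.values()) - min(accuracy.values())
print(accuracy)
print(f"Largest accuracy gap between groups: {gap:.2f}")
```

A persistent gap between groups is a signal to investigate the training data and the model. On its own it does not tell you where the bias comes from.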

Q: How do we keep TIS updated as our service changes?

A: Incorporate TIS checks into your development cycles, re-checking after major launches or AI updates. This ensures continuous alignment with trust objectives.

Final note

Measuring trust through TIS is an investment in both your users and your long-term success.

By making trust measurable, you invite the whole organisation—designers, developers, executives—to pull in the same direction: delivering AI-driven experiences that genuinely honour user values.

At ELSE, we combine deep industry insights with practical design expertise across loyalty (O2, MGM, Shell), identity (GEN’s Midy), regulated sectors (UBS, T. Rowe Price, Boehringer-Ingelheim, Bupa), and AI innovation (Genie, Good.Engine, plus our own R&D).

Drawing on this broad experience, we’re uniquely positioned to help organisations embed trust, transparency, and user-centricity into digital product and service delivery—ensuring that the future of AI-driven experiences remains both ethical and commercially compelling.

Interested in learning more about designing for Trust?

This is the fourth article in a six-part series exploring emergent concepts around trust and AI—vital considerations for anyone responsible for digital product/service delivery and innovation. Across these articles, we delve into frameworks, real-world examples, and thought leadership insights to help organisations design user-centric experiences that uphold privacy, transparency, and ethical autonomy. Whether you’re a designer, product manager, or executive, these perspectives will prepare you to navigate the evolving intersection of technology, regulation, and user expectations with confidence and clarity.
