Trust Series: Part 2

Ignoring these CX Principles is a costly mistake

With well over 25 years in this industry, we’ve been in enough brand/strategy/purpose workshops to know that while many brands want to be ‘trusted’, too few understand what designing trust into their product/service experience actually looks like. And with the advent of AI, trust is the must-have currency.

Picture this: you’re about to try a new banking app for the first time, only to be met with a labyrinth of pop-ups and data-sharing prompts. You wonder, “What are they actually doing with my information, and do I really have a choice?” In today’s digital landscape, features like privacy dashboards, transparent consent flows, and accessible AI disclosures aren’t just niceties; they’re the foundation of a trusted relationship between users and the organisations that serve them.

This article dives into four pillars—Trust, Privacy, Control, and Transparency—that ensure digital services respect user rights while fostering long-term loyalty. We weave in insights from thought leaders in trust, privacy and identity, who advocate user-centric consent in design, and who emphasise that identity is a new currency. Additionally, we’ll see how the philosophies of people like Doc Searls, Dr Joy Buolamwini, Jamie Smith, and Cory Doctorow converge on a single point: users should be the ultimate stewards of their own data.

The four pillars: a quick overview

- Trust

Users feel confident that the service acts in their best interests (when was the last time you felt that!?), safeguarding data and avoiding manipulative tactics.

- Privacy

Services respect the confidentiality of personal data, collecting only what’s essential and securing it rigorously (Same again. Last time?).

- Control

Individuals decide how their information is accessed, stored, and used—data ownership remains firmly in the user’s hands.

- Transparency

Clear, jargon-free explanations of AI processes and data usage, so people know exactly what is happening and why.

True innovation in this space is about empowering users to understand and manage their data, rather than burying disclosures in legalese or obscure interfaces. This resonates with Doc Searls’ VRM (Vendor Relationship Management) concept, which places individuals at the centre of data transactions—rather than leaving them subject to the opaque interests of big tech.

Why they matter now

- Regulatory push

Laws like the EU AI Act and GDPR demand that organisations uphold user rights and accountability for AI-driven decisions.

- Consumer expectations

Jamie Smith points out that modern users have become increasingly attuned to data misuse, expecting more straightforward, honest interactions with digital services. According to Cisco’s 2022 Consumer Privacy Survey, 81% of respondents said they would spend time or money to protect their personal data, indicating that modern consumers are both aware of and concerned about data misuse.

- Competitive differentiation

In a market flooded with “growth-at-all-costs” mindsets, companies offering trust-first and privacy-led experiences will stand out, building loyal communities along the way. Further still, the ‘loyalty’ landscape is shifting, but we’ll write about that another time.

“I’m not opposed to the monetization of people’s information with their informed consent, but I’m super opposed to doing it behind their backs”

—Information Doesn’t Want To Be Free, Cory Doctorow

Designing for trust

What it means: Trust is about demonstrating genuine respect for users—no hidden fees, no sneaky data sharing. Dave Birch underscores that if an entity mismanages digital identities, it can quickly erode confidence and invite regulatory scrutiny. Scrutiny at best, action at worst.

Some considerations:

- Layered communication: Offer high-level info up front (e.g. key data policies), with detailed “learn more” sections for curious users.

- Consistent UX: Don’t surprise users with sudden or undisclosed policy shifts—any change in data usage should be front and centre.

- Open roadmaps: Engage the community in product roadmaps to show how user feedback shapes features and improvements.

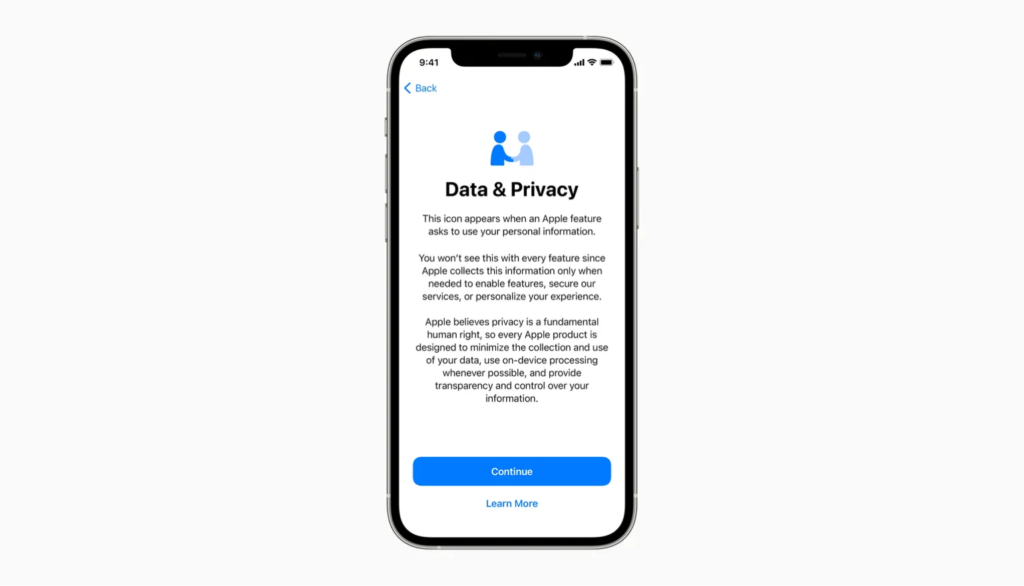

Real-Life Example – Apple’s App Privacy Labels

By presenting data usage summaries at a glance, Apple helps users see whether an app collects location data, usage stats, or personal identifiers—before they download it. This no-nonsense approach fosters a base level of trust.

Designing for privacy

What it means: Privacy ensures data is collected minimally and handled responsibly. Doctorow warns of “enshittification” where services quietly expand data collection over time, compromising privacy for profit. While we say ‘warns’, we think it’s fair to say that the evidence of products/services/platforms getting ‘shitter’ over time is quite clear.

Some considerations:

- Data minimisation: Only gather the data you truly need. Every extra piece of personal info increases both risk and user anxiety.

- Granular consent: Avoid bundling all permissions into a single “I Agree” button. Let users opt in or out of specific data categories.

- Robust security: From encryption to secure storage (potentially even decentralised models), ensure data is well-defended against breaches.
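To make the first two considerations a little more concrete, here is a minimal sketch of how granular consent and data minimisation might fit together in code. Everything in it is an assumption for illustration: the category names, the `ConsentRecord` class, the `FIELD_CATEGORY` mapping and the `minimise` helper are hypothetical and would need to reflect your own data model and legal advice.

```python
from dataclasses import dataclass, field

# Hypothetical data categories; a real product would define its own.
CATEGORIES = ("essential", "analytics", "personalisation", "marketing")


@dataclass
class ConsentRecord:
    """Per-category consent for one user. Everything optional defaults to off."""

    choices: dict = field(
        default_factory=lambda: {c: c == "essential" for c in CATEGORIES}
    )

    def set_choice(self, category: str, allowed: bool) -> None:
        """Record an opt-in or opt-out for a single category."""
        if category not in self.choices:
            raise ValueError(f"Unknown category: {category}")
        if category == "essential" and not allowed:
            raise ValueError("Essential data is required to provide the service")
        self.choices[category] = allowed

    def allows(self, category: str) -> bool:
        return self.choices.get(category, False)


# Hypothetical mapping of each collected field to the category that justifies it.
FIELD_CATEGORY = {
    "email": "essential",
    "page_views": "analytics",
    "location": "personalisation",
}


def minimise(payload: dict, consent: ConsentRecord) -> dict:
    """Keep only fields tied to a consented category; drop anything unmapped."""
    return {
        key: value
        for key, value in payload.items()
        if key in FIELD_CATEGORY and consent.allows(FIELD_CATEGORY[key])
    }


consent = ConsentRecord()
consent.set_choice("analytics", True)  # the user opts in to analytics only
stored = minimise(
    {"email": "a@example.com", "page_views": 12, "location": "London", "device_id": "x"},
    consent,
)
print(stored)
```

The design choice worth noting is the default: optional categories start switched off, and any field without a stated purpose (`device_id` here) is never stored, so collecting more data requires a deliberate decision rather than happening by accident.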

Real-Life Example – Signal Messenger

Signal’s privacy-first architecture collects minimal metadata and encrypts communications end-to-end. In doing so, it demonstrates that convenience and strong privacy can coexist.

Designing for control

What it means: Control places users in the driver’s seat—deciding how their data is accessed, stored, and shared. Doc Searls’ VRM model argues for exactly this: user-led data interactions rather than corporate-led data exploitation. Oooh, imagine.

(Aside: Doc Searls’ VRM (Vendor Relationship Management) concept inverts the traditional CRM model by giving individuals, not companies, the power to control how their data is shared. In doing so, VRM aims to foster more equitable, transparent, and mutually beneficial relationships between users and vendors. The most notable examples so far are in the car buying and selling space.)

Some considerations:

- User-friendly dashboards: Offer clear interfaces for managing data permissions, toggles, and account details. Too often, the ‘My Account’ area of a product or service is an afterthought, designed around the preference capture the enterprise needs rather than around user control and building in trust.

- Dynamic consent: Let individuals adjust permissions over time (something we’ll explore deeper in the third article in this series).

- Multi-level autonomy (dynamic consent): If AI-driven features handle sensitive tasks, like personal finance, allow users to set thresholds (e.g., “Alert me over £100” or “Ask before investing on my behalf”).
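As a rough illustration of the threshold idea, the sketch below shows one way user-set limits could gate what an AI agent does on its own. It is an assumption-heavy example rather than a recommended implementation: `AutonomySettings`, `Decision` and `decide` are hypothetical names, and the two settings simply mirror the examples in the bullet above.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"      # act without interrupting the user
    NOTIFY = "notify"        # act, then alert the user
    ASK_FIRST = "ask_first"  # pause until the user approves


@dataclass
class AutonomySettings:
    """User-owned settings; the user can change them at any time."""

    alert_over_gbp: float = 100.0      # "Alert me over £100"
    ask_before_investing: bool = True  # "Ask before investing on my behalf"


def decide(action: str, amount_gbp: float, settings: AutonomySettings) -> Decision:
    """Return how much autonomy the agent has for this action."""
    if action == "invest" and settings.ask_before_investing:
        return Decision.ASK_FIRST
    if amount_gbp > settings.alert_over_gbp:
        return Decision.NOTIFY
    return Decision.PROCEED


settings = AutonomySettings()
print(decide("invest", 20.0, settings))    # investing always asks first by default
print(decide("payment", 150.0, settings))  # above the alert threshold
print(decide("payment", 40.0, settings))   # small, routine action
```

Because the settings belong to the user rather than to the product team, consent stays dynamic: lowering `alert_over_gbp` or switching off `ask_before_investing` changes the agent’s behaviour immediately, with no hidden defaults behind it.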

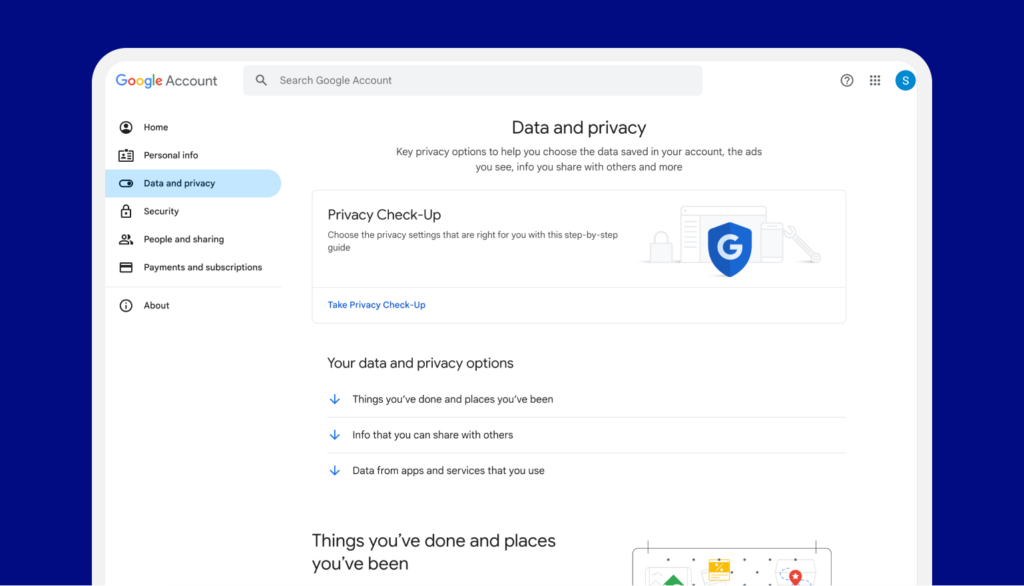

Real-Life Example – Google’s My Account

While not perfect, Google’s central dashboard attempts to unify user controls—letting individuals pause location tracking, delete search history, or manage ad personalisation. This structure at least surfaces these choices, rather than burying them.

Designing for transparency

What it means: Transparency means telling users what’s happening, why it’s happening, and how decisions are made. Dr Joy Buolamwini shows that when AI remains a “black box,” it can lead to biases going unchallenged—especially in facial recognition or credit scoring.

The bias inherent in facial recognition technologies, particularly against women with darker skin, is very well documented by Buolamwini and her not-for-profit Algorithmic Justice League. That these systems are in use by a large number of US state police authorities, border forces and other enforcement agencies in the US and EU should concern us all. (We’ve shared this multiple times, but here we go again: Coded Bias, a documentary on Netflix featuring Buolamwini, explores this topic in detail.)

Some considerations:

- Explainable AI: Offer simple breakdowns (“Your loan application was approved because you met income and credit thresholds”).

- Open policies: When data usage or AI logic changes, communicate it early. Possibly provide knowledge bases or whitepapers for those who want deeper details.

- Visual cues: If an AI interacts with user data in real time, show a small prompt or icon, building user awareness and comfort.
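For simple, rule-based decisions, the kind of plain-language breakdown described under “Explainable AI” can be generated directly from the checks themselves. The sketch below assumes a decision made purely from minimum thresholds; the `Check` class, the `explain` function and the figures are hypothetical. Decisions made by more complex models need dedicated explanation techniques, which this does not attempt to cover.

```python
from dataclasses import dataclass


@dataclass
class Check:
    label: str        # plain-language name shown to the user
    value: float      # the applicant's figure
    threshold: float  # the minimum required

    @property
    def passed(self) -> bool:
        return self.value >= self.threshold


def explain(checks: list[Check]) -> str:
    """Turn the outcome of threshold checks into one jargon-free sentence."""
    failed = [c.label for c in checks if not c.passed]
    if not failed:
        met = " and ".join(c.label for c in checks)
        return f"Your loan application was approved because you met {met} thresholds."
    missed = " and ".join(failed)
    noun = "threshold" if len(failed) == 1 else "thresholds"
    return f"Your loan application was declined because you did not meet the {missed} {noun}."


# Hypothetical figures for illustration only.
print(explain([Check("income", 42000, 30000), Check("credit", 710, 650)]))
print(explain([Check("income", 42000, 30000), Check("credit", 600, 650)]))
```

The point is that the explanation is built from the same data that drove the decision, so what the user reads cannot drift away from what the system actually did.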

Real-life example – Microsoft’s Responsible AI Resources

Microsoft publicly shares guidelines and standards for how it develops AI, demonstrating a commitment to transparent practices. This level of disclosure helps both users and partners trust they’re working with a company that adheres to ethical standards.

Why this is important

Boosting user engagement

A design that respects privacy, offers flexible consent, and explains AI’s workings can significantly increase user engagement and satisfaction. When people feel in control, they’re more apt to explore features, offer feedback, and become brand advocates.

Mitigating regulatory risks

With new regulations popping up worldwide, building for trust and transparency from the ground up helps organisations stay ahead of compliance demands, rather than rushing to retrofit their services. Jamie Smith of Customer Futures argues that meeting legal mandates should be a by-product of good design, not a last-minute hassle. Jamie, we agree.

Competitive differentiation

At a time when many platforms rely on dark patterns, manipulative interfaces, and data hoarding, offering genuine user empowerment sets you apart. This is the essence of Gold’s stance: ethics and user trust aren’t barriers—they’re market advantages. Or at least, they should be.

Risks and challenges

Overcomplicating the user experience

There’s a fine line between offering robust controls and overwhelming people with pop-ups and toggles. A strong UX approach keeps choices intuitive and easy to find, rather than buried in complexity.

Internal coordination

Organisations often struggle with siloed teams—design, legal, and engineering may have different priorities. Ensuring alignment around these four pillars demands clear leadership and cross-functional collaboration.

Cultural resistance

Some product teams see trust-and-privacy features as a drag on innovation or data-driven monetisation. Shifting that mindset requires championing a holistic view: trust fosters long-term success, not short-term exploitation. Honestly, this stuff should really be coming from product and technology teams anyhow.

Wrapping up

By making trust, privacy, control, and transparency foundational elements of your product/service, you cultivate experiences that feel secure, empowering, and authentically user-centric. This isn’t just about ticking legal boxes: Gold, Birch, Searls, Buolamwini, Smith, and Doctorow all underscore the moral and strategic edge in building user rights into your product DNA. Let’s add Else’s heft to that. It makes sense, and it makes good business sense too.

When organisations meet (or dare we say, exceed) regulatory requirements proactively and transparently, they invite not only compliance but genuine user loyalty. Ultimately, the more you weave these four pillars into day-to-day operations, the more you shift from merely offering a service to becoming a trusted partner in your users’ digital lives.

TL;DR

- Four pillars defined: Trust, Privacy, Control, and Transparency are essential to ethical, sustainable digital services.

- Real-world benefits: Encourages stronger user loyalty, easier compliance, and deeper brand differentiation.

- Challenges: Avoid overwhelming users with complexity, align cross-functional teams, and overcome cultural resistance to shift from “data hoarding” to “data respecting.”

- Your call to action: Audit your current UX/UI for alignment with these pillars, and build ethical design in from the ground up rather than retrofitting it at the last minute. Naturally, as ever, contact us if you need help with this.

Some questions

Q: Can a product be too transparent and reveal proprietary secrets?

A: Striking a balance is key. Offer enough detail so users understand data usage and AI logic without disclosing sensitive IP.

Q: How do we avoid user fatigue from too many privacy controls?

A: Lean into intuitive interfaces and progressive disclosure. Put critical choices up front, and let users dive deeper only if they wish.

Q: Is “privacy by design” more costly or time-consuming?

A: It can require more planning initially, but it often reduces legal, reputational, and technical debts later on.

Q: What if my organisation still relies on ad revenue from user data?

A: Consider more transparent data models—like clearly stating, “We share limited browsing data to support free services.” Users prefer honesty, and many will opt in if they feel respected.

Final note

Designing for trust, privacy, control, and transparency isn’t just a one-off initiative; it’s a cultural shift. By adopting these pillars, you set a new standard for ethical innovation—one that resonates with user expectations and readies your organisation for an evolving digital future.

At ELSE, we combine deep industry insights with practical design expertise across loyalty (O2, MGM, Shell), identity (GEN’s Midy), regulated sectors (UBS, T. Rowe Price, Boehringer-Ingelheim, Bupa), and AI innovation (Genie, Good.Engine, plus our own R&D).

Drawing on this broad experience, we’re uniquely positioned to help organisations embed trust, transparency, and user-centricity into digital product and service delivery—ensuring that the future of AI-driven experiences remains both ethical and commercially compelling.

Interested in learning more about designing for Trust?

This is the second in a six-part series exploring emergent concepts around trust and AI—vital considerations for anyone responsible for digital product/service delivery and innovation. Across these articles, we delve into frameworks, real-world examples, and thought leadership insights to help organisations design user-centric experiences that uphold privacy, transparency, and ethical autonomy. Whether you’re a designer, product manager, or executive, these perspectives will prepare you to navigate the evolving intersection of technology, regulation, and user expectations with confidence and clarity.
