Trust Series: Part 6

Driving Standards: Benchmarking, Open Standards & Industry Collaboration

This series of articles started out exploring the idea that, driven by the advent of AI in our digital products and services, designing-in Trust around data, privacy and identity would become a competitive advantage. Now we’re at a point where we’re discussing the implications of an entire industry moving forward on this, in a user-centric way.

Imagine a world where every major digital platform follows a uniform set of trust principles—clear AI disclosures, strong privacy controls, and reliable user consent mechanisms—no matter which app or site you visit. This utopia may sound far-fetched, but a collective push for open standards and cross-industry collaboration can move us closer to a truly user-first digital ecosystem.

Building on insights from thought leaders in the trust and identity space, this article explores how standardising trust practices can benefit not just users, but entire industries. Big call.

Definition & context

Standardisation: Why bother?

Standardisation means creating industry-wide benchmarks, protocols, or guidelines that companies can follow to ensure consistent levels of trust, privacy, and control. By adopting shared frameworks (similar to how the World Wide Web Consortium sets web standards), organisations reduce fragmentation and user confusion.

- Dave G.W. Birch contends that interoperable identity systems thrive when multiple stakeholders adopt consistent rules.

- Dr Joy Buolamwini notes that consistent data and ethics standards help measure, compare, and mitigate AI biases across platforms.

- Cory Doctorow warns that without collective safeguards, individual efforts can be sidelined by more exploitative models. And yes, there are bad agents in the world.

How it works: Pathways to standardisation

Benchmarking & common metrics

- Else’s Trusted Interaction Score (TIS): From Article 4 (Measuring trust: Introducing Else’s Trusted Interaction Score (TIS)), TIS could become a baseline measure. If multiple companies adopt similar metrics, users can compare trust “at a glance.”

- Industry-specific benchmarks: For finance, focus on data security and user consent thresholds; for social media, emphasise content moderation transparency.
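To make the idea of industry-specific benchmarks concrete, here is a minimal sketch of a shared score whose dimensions are weighted differently per sector. This is not the TIS formula from Article 4; the dimension names, the weights, and the `benchmark_score` function are all hypothetical, chosen only to show how one common scale could still reflect different sector priorities.

```python
# Hypothetical weights (percentages summing to 100) per industry.
# These are illustrative assumptions, not Else's TIS and not an agreed standard.
WEIGHTS = {
    "finance": {"data_security": 50, "consent": 30, "transparency": 20},
    "social_media": {"data_security": 20, "consent": 30, "transparency": 50},
}


def benchmark_score(industry: str, ratings: dict) -> float:
    """Combine 0-100 ratings per dimension into one weighted 0-100 score."""
    weights = WEIGHTS[industry]
    missing = set(weights) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    # Weighted average: each rating counts in proportion to its sector weight.
    return sum(weights[dim] * ratings[dim] for dim in weights) / 100


# The same service, rated once, scores differently against each benchmark.
ratings = {"data_security": 80, "consent": 60, "transparency": 40}
finance_score = benchmark_score("finance", ratings)
social_score = benchmark_score("social_media", ratings)
```

Because the ratings are collected once and only the weights change, a service can be compared "at a glance" within its own sector without every sector having to agree on identical priorities.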

Real-Life Example – LEED Certification (Analogy)

In architecture, LEED provides a global green-building standard. A building’s LEED rating helps stakeholders quickly assess environmental performance. A similar model for trust could do the same for AI-driven services.

(LEED stands for Leadership in Energy and Environmental Design. It’s a globally recognised certification system for sustainable building. Buildings and projects earn LEED points by meeting specific standards in areas like energy efficiency, water conservation, indoor air quality, and the use of eco-friendly materials. Depending on how many points a project achieves, it may receive LEED certification at different levels, e.g., Certified, Silver, Gold, or Platinum. Essentially, LEED serves as a benchmark for designing, constructing, and operating buildings in an environmentally responsible way.)

Open standards & protocols

Sarah Gold advocates for open, user-friendly standards around privacy and consent. This might include:

- Consent tokens: Standard ways to verify, revoke, or update user permissions.

- Interoperable data formats: Ensuring users can port their data from one service to another, akin to Doc Searls’ VRM vision.

- API guidelines: Common protocols so third-party developers also adhere to trust principles, preventing “weak links” in the ecosystem.
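As a rough illustration of what a consent token could look like, the sketch below models the three operations named above: verify, revoke, and update. It is an assumption-laden toy, not an existing standard: the `ConsentToken` fields, the HMAC signing scheme, and the `SECRET` key are all hypothetical, and a real protocol would need key management, expiry, and an agreed wire format.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, replace

SECRET = b"demo-secret"  # hypothetical signing key, for illustration only


@dataclass(frozen=True)
class ConsentToken:
    user_id: str
    scopes: frozenset  # permissions the user has granted, e.g. {"email"}
    version: int = 1
    revoked: bool = False

    def payload(self) -> bytes:
        # Canonical serialisation so the same token always signs the same way.
        return json.dumps(
            {
                "user_id": self.user_id,
                "scopes": sorted(self.scopes),
                "version": self.version,
                "revoked": self.revoked,
            },
            sort_keys=True,
        ).encode()


def sign(token: ConsentToken) -> str:
    """Return an HMAC-SHA256 signature over the token's payload."""
    return hmac.new(SECRET, token.payload(), hashlib.sha256).hexdigest()


def verify(token: ConsentToken, signature: str, scope: str) -> bool:
    """True only if the signature matches, the token is live, and the scope is granted."""
    authentic = hmac.compare_digest(sign(token), signature)
    return authentic and not token.revoked and scope in token.scopes


def update(token: ConsentToken, scopes) -> ConsentToken:
    """Issue a new token version with a changed set of permissions."""
    return replace(token, scopes=frozenset(scopes), version=token.version + 1)


def revoke(token: ConsentToken) -> ConsentToken:
    """Issue a new token version that grants nothing."""
    return replace(token, revoked=True, version=token.version + 1)


# Usage: grant two scopes, narrow to one, then revoke.
granted = ConsentToken("user-1", frozenset({"email", "location"}))
granted_sig = sign(granted)
narrowed = update(granted, {"email"})
withdrawn = revoke(narrowed)
```

Each change produces a new signed version rather than editing the old token in place, so a signature issued for an earlier version stops verifying against the updated or revoked token. That is one possible design choice for making consent changes auditable; others, such as a central revocation list, would work too.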

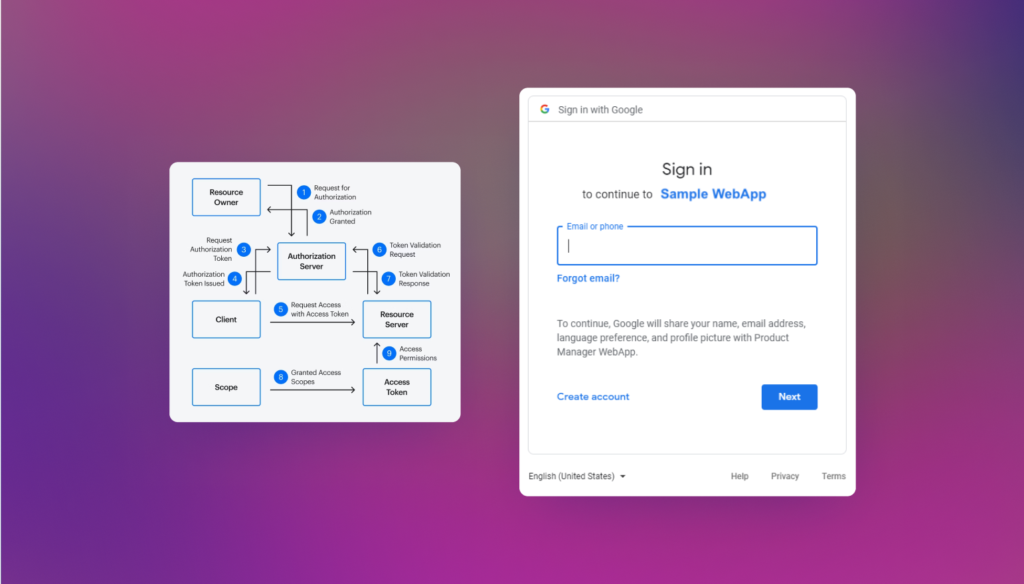

Real-Life Example – OAuth

OAuth is a widely adopted protocol for secure authorisation. While not solely about trust, it shows how an open standard can unify otherwise fragmented services. We said earlier that we were first excited (yes, really) by OAuth in the mid-noughties.

Cross-industry collaboration

- Working groups: Multi-company alliances to define best practices (e.g., a Trust in AI consortium featuring tech, finance, healthcare players).

- Joint R&D: Pool resources to tackle common issues—like algorithmic bias or robust identity frameworks. Dr Joy Buolamwini’s Algorithmic Justice League model could be extended to an industry-level AI oversight body.

- Shared auditing & accreditation: Companies might undergo third-party reviews against trust standards, publishing “trust reports” much as banks publish solvency reports.

Real-Life Example – The FIDO Alliance

FIDO (Fast IDentity Online) unites tech giants to create open authentication standards (like biometric and token-based logins). By collaborating, they shape secure login experiences worldwide, demonstrating how cross-industry alignment can improve user trust.

Benefits and use cases

Smoother user experience

When trust features like dynamic consent, data dashboards, and AI transparency look and feel similar across platforms, users don’t have to relearn or guess how to protect themselves. Jamie Smith calls it a “universal user literacy,” where consistent design patterns reduce friction.

Reduced compliance overheads

Instead of each company interpreting regulations differently, standard guidelines cut down on confusion and legal risk. Dave G.W. Birch highlights that in finance especially, inconsistent approaches can slow innovation.

Raising the collective bar

By aligning on ethical norms, businesses can avoid a “race to the bottom” where less scrupulous players exploit users. Cory Doctorow emphasises that standardisation prevents exploitative models from dominating simply because they’re more profitable in the short term.

Risks and challenges

Sluggish adoption

Establishing and updating standards often involves large committees, which can be slow. Doc Searls warns that bureaucratic inertia might delay meaningful progress.

One-size-fits-all?

Not every industry or region has the same legal framework or user needs. A universal standard might risk oversimplification—Dr Joy Buolamwini reminds us that biases and local contexts differ widely.

Potential for ‘Fake compliance’

Without robust audits, companies might claim adherence without truly meeting the spirit of these standards—Sarah Gold underscores the need for genuine, user-tested compliance, rather than superficial certifications.

Comparison

| | Fragmented Approach | Standardised Ecosystem |
| --- | --- | --- |
| User Experience | Inconsistent, confusing consent flows | Predictable, user-friendly patterns across apps |
| Innovation | Slowed by repeated reinvention of trust features | Freed to focus on product improvements rather than re-solving basics |
| Risk of Exploitation | High if some players cut corners | Lower overall; strong standards reduce “cheater” advantage |

Wrapping up

Moving “towards standardisation” isn’t a pipe dream; it’s a tangible strategy for elevating trust across industries. From Projects by IF’s open consent frameworks to Doc Searls’ VRM protocols, visionaries recognise that collaboration amplifies impact. Dave G.W. Birch points to consistent identity and data handling as a foundation for thriving digital services, while Dr Joy Buolamwini’s focus on bias highlights the need for communal oversight. Jamie Smith and Cory Doctorow equally stress that ethical, user-centric models can be more than competitive—they can transform entire sectors.

The overarching message? Trust is a team sport. By uniting on shared metrics, open standards, and cross-industry alliances, we can build a digital future where user empowerment is the norm—not the exception. And as standardisation becomes a competitive differentiator, everyone benefits: organisations, regulators, and, most importantly, the users themselves.

TL;DR

- Standardisation’s Role: Common guidelines and metrics enable consistent trust practices across platforms.

- Examples: Borrow from LEED certifications, OAuth, and the FIDO Alliance to show how shared standards can unify industries.

- Benefits: Smoother user experiences, lower compliance overheads, and protection from exploitative players.

- Challenges: Bureaucracy, one-size-fits-all limitations, and “fake compliance” if audits aren’t robust.

- Your call to action: Participate in or initiate cross-industry working groups, adopt open protocols, and champion a collaborative approach to trust. If you are interested in collaborating and providing some centre of gravity together with Else, let us know.

Some questions

Q: Does standardisation stifle competition?

A: Ideally, no. It sets a baseline for user protection, freeing companies to differentiate on advanced features, performance, or innovation.

Q: Which organisation should spearhead these standards?

A: Likely a consortium of industry stakeholders, regulators, and user advocacy groups, similar to W3C or the FIDO Alliance.

Q: Could universal standards ignore local or cultural nuances?

A: Standards must be flexible enough to accommodate regional laws and cultural values. Sub-frameworks might adapt universal principles to local contexts.

Q: Is there a risk that standards become outdated quickly?

A: Yes. Continuous updates, guided by evolving tech (like new AI advances) and user feedback, are essential to keep standards relevant.

Final note

Standardising trust practices empowers both businesses and users, reducing fragmented data policies and ensuring consistent user control across platforms. Guided by the collective insights of Sarah Gold, Dave G.W. Birch, Doc Searls, Dr Joy Buolamwini, Jamie Smith, and Cory Doctorow, the path toward open standards and cross-industry collaboration can solidify a more ethical, user-centric digital ecosystem—one that genuinely benefits everyone involved.

At ELSE, we combine deep industry insights with practical design expertise across loyalty (O2, MGM, Shell), identity (GEN’s Midy), regulated sectors (UBS, T. Rowe Price, Boehringer-Ingelheim, Bupa), and AI innovation (Genie, Good.Engine, plus our own R&D).

Drawing on this broad experience, we’re uniquely positioned to help organisations embed trust, transparency, and user-centricity into digital product and service delivery—ensuring that the future of AI-driven experiences remains both ethical and commercially compelling.

Interested in learning more about designing for Trust?

This is the sixth article in a six-part series exploring emergent concepts around trust and AI—vital considerations for anyone responsible for digital product/service delivery and innovation. Across these articles, we delve into frameworks, real-world examples, and thought leadership insights to help organisations design user-centric experiences that uphold privacy, transparency, and ethical autonomy. Whether you’re a designer, product manager, or executive, these perspectives will prepare you to navigate the evolving intersection of technology, regulation, and user expectations with confidence and clarity.
